MHR, Regular Distributions and Myerson’s Lemma

Monotone Hazard Rate (MHR) distributions and its superclass regular distributions keep appearing in the Mechanism Design literature and this is due to a very good reason: they are the class of distributions for which Myerson’s Optimal Auction is simple and natural. Let’s brief discuss some properties of those distributions. First, two definitions:

- Hazard rate of a distribution

:

- Myerson virtual value of a distribution

:

We can interpret the hazard rate in the following way: think of as a random variable that indicates the time that a light bulb will take to extinguish. If we are in time

and the light bulb hasn’t extinguished so far, what is the probability it will extinguish in the next

time:

We say that a distribution is monotone hazard rate, if is non-decreasing. This is very natural for light bulbs, for example. Many of the distributions that we are used to are MHR, for example, uniform, exponential and normal. The way that I like to think about MHR distributions is the following: if some distribution has hazard rate

, then it means that

. If we define

, then

, so:

From this characterization, it is simple to see that the extremal distributions for this class, i.e. the distributions that are in the edge of being MHR and non-MHR are constant hazard rate, which correspond to the exponential distribution for

. They way I like to think about those distributions is that whenever you are able to prove something about the exponential distribution, then you can prove a similar statement about MHR distributions. Consider those three examples:

Example 1: for MHR distributions. This fact is straightforward for the exponential distribution. For the exponential distribution

and therefore

but the proof for MHR is equally simple: Let , therefore

.

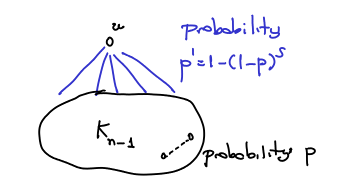

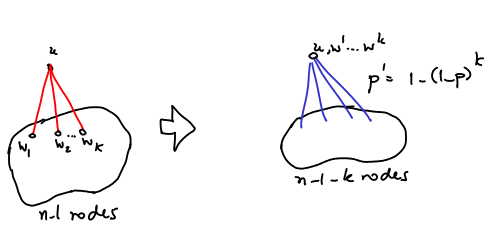

Example 2: Given iid where

is MHR and

and

, then

. The proof for the exponential distribution is trivial, and in fact, this is tight for the exponential, the trick is to use the convexity of

. We use that

in the following way:

Since , we have that

. This way, we get:

Example 3: For MHR distributions, there is a simple lemma that relates the virtual value and the real value and this lemma is quite useful in various settings: let , then for

,

. Again, this is tight for exponential distribution. The proof is quite trivial:

Now, MHR distributions are a subclass of regular distributions, which are the distributions for which Myerson’s virtual value is a monotone function. I usually find harder to think about regular distributions than to think about MHR (in fact, I don’t know so many examples that are regular, but not MHR. Here is one, though, called the equal-revenue-distribution. Consider

distributed according to

. The cumulative distribution is given by

. The interesting thing of this distribution is that posted prices get the same revenue regardless of the price. For example, if we post any price

, then a customer with valuations

buys the item if

by price

, getting revenue is

. This can be expressed by the fact that

. I was a bit puzzled by this fact, because of Myerson’s Lemma:

Myerson Lemma: If a mechanism sells to some player that has valuation

with probability

when he has value

, then the revenue is

.

And it seemed that the auctioneers was doomed to get zero revenue, since . For example, suppose we fix some price

and we sell the item if

by price

. Then it seems that Myerson’s Lemma should go through by a derivation like that (for this special case, although the general proof is quite similar):

but those don’t seem to match, since one side is zero and the other is 1. The mistake we did above is classic, which is to calculate . We wrote:

but both are infinity! This made me realize that Myerson’s Lemma needs the condition that , which is quite a natural a distribution over valuations of a good. So, one of the bugs of the the equal-revenue-distribution is that

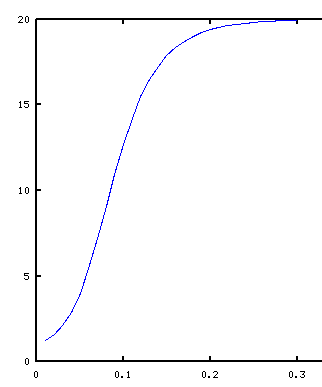

. A family that is close to this, but doesn’t suffer this bug is:

for

, then

. For

we have

, then we get

.