Consistent Labeling

This week, me and Igor are presenting the paper “Metric clustering via consistent labeling” by Krauthgamer and Roughgarden in our Algorithms Reading Group. To prepare for the presentation, I thought that writting a blog post about it was a nice idea. Consistent Labeling is a framework that allows us to represent a variety of metric problems, as computing a separating decomposition of a metric space, a padded decomposition (both which are the main ingredient of embedding metric spaces into dominating trees), sparse cover, metric triangulation and so on. Here, I’ll define the Consistent Labeling Problem, formulate it as an Integer Program and show how we can get an approximation algorithm to it by rounding its linear relaxation.

First, consider a base set . We want to attribute labels in

to the elements of

respecting some constraints. First, each element

has associated with it a subset

of labels it can be assigned. Each element can receive at most

labels. Second, there is a collection

of subsets of

, so that for each

, there must be one label

that is assigned to all elements in

. For each element in

, there is a penalty

to violate this constraint.

Our goal is to find a probability distribution over labelings that minimizes the total penalty . Let’s formulate this is an integer program. For that, the first thing we need are decision variables: let

be a

-variable indicating if label

is assigned to element

. Let the variable

mean that the label

was assigned to all elements in

and let

mean that set

is consistently labeled. A linear programming formulation can be therefore expressed as:

It is not hard to see that if are

-variables then the formulation corresponds to the original problem. Now, let’s relax it to a Linear Program and interpret those as probabilities. The rounding procedure we use is a generalization of the one in “Approximation algorithms for classification problems” of Kleinberg and Tardos: until every object has

labels, repeat the following procedure: pick

and

and assign label

to all objects with

. In the end, pick the

first labels assigned to each object.

What we just described is a procedure that, given a solution to the relaxed LP, produces a randomized labeling of the objects. Now, we need to prove that the solution produced by this randomized labeling is good in expectation, that is, that is good compared to the optimal single deterministic assignment. The authors prove that they are within a factor of

, where

.

Theorem 1 For each

, the probability that

is consistently labeled is lower bounded by

.

Proof: Since we are trying to lower bound the probability of getting consistently labeled, we can consider just the probability of all the elements in to be consistently labeled in the same iteration – let’s estimate this probability. This is:

If is chosen in iteration

, all elements are labeled with

if

for all

, so the probability is

. So, we have:

Now, let be the probability that set

is hit by the labeling in phase

. If label

is chosen, the set

is hit by the labeling if

, therefore:

inverting the order of the summation, we get:

The probability that is consistently labeled is smaller greater than the probability that it is consistently labeled in the same iteration before the set is hit

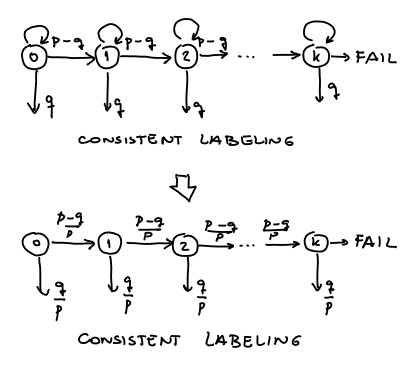

times. In one iteration three things may happen: either the set is not hit, or it is hit but it is not consistently labeled or it is consistently labeled. The figure below measures how many times the set is hit. The down arrows represent the event that the set

is consistently labeled:

A standard trick in this cases is to disregard the self-loops and normalize the probabilities. This way the probability that is consistently labeled is:

Now, we just use that and

to obtain the desired result.

The approximation factor of follows straight from the previous theorem by considering the following inequalities:

and noting that the LP solution is a lower bound of the optimal value

Theorem 2 The rounding procedure gives a randomized algorithm that, in expectation, achieves a

approximation to the optimal consistent labeling.